
CIID Thesis Project, 2025

Mentors: Chris Downs, Jose Chavarría, Raphael Katz

Project duration: 8 weeks

Art is a deeply human act rooted in intuition, creativity and lived experience. I've been exploring how we might use AI to create art that remains original and true to ourselves. Today's AI interfaces are text-based and feel like a black box, where my own essence gets lost in the process. Technology has always transformed art (paint tubes enabling plein-air landscapes, cameras sparking abstraction). How will art-making change with AI?

My thesis is a series of explorations built around a twist on Louis Kahn's famous question, 'what does a brick want to be?' My version: what does AI want to be? It's a question about respecting materials and building structures that help them shine through. Through a dozen prototypes, I explored AI's material nature, as an interface and as a non-human intelligence: its water-like elasticity, and how it transforms as I give it different senses and abilities. What's the best way to collaborate with AI?

Noticing a pattern in my explorations led to Block, an AI collaborator for fine artists. It has two senses (vision, hearing) and two abilities (speech, projection), the most natural mediums for collaboration. The artist stays central to the process, while AI's meaninglessness expands their box of thinking.

I got to talk to some brilliant artists and experimented in parallel to test what I was hearing. I focused on three things: their creative process, collaboration, and working with AI.

AI isn't a popular teammate. I heard about how it lacks empathy, warmth and the sensitivity of a person. That's expected. But Arnab and Emidio showed me the flip side: how AI's alternative understanding of the world can help expand one's box of thinking. Hallucinations as AI creativity.

There's a certain diverseness that AI brings. Where AI shines is how it takes you into interesting dimensions of the same idea where you're almost bouncing across.

Artist in Residence, CMU

What I found fascinating was that with AI, you always find flaws or inconsistencies that it can make, that can lead you to new paths.

Art Director

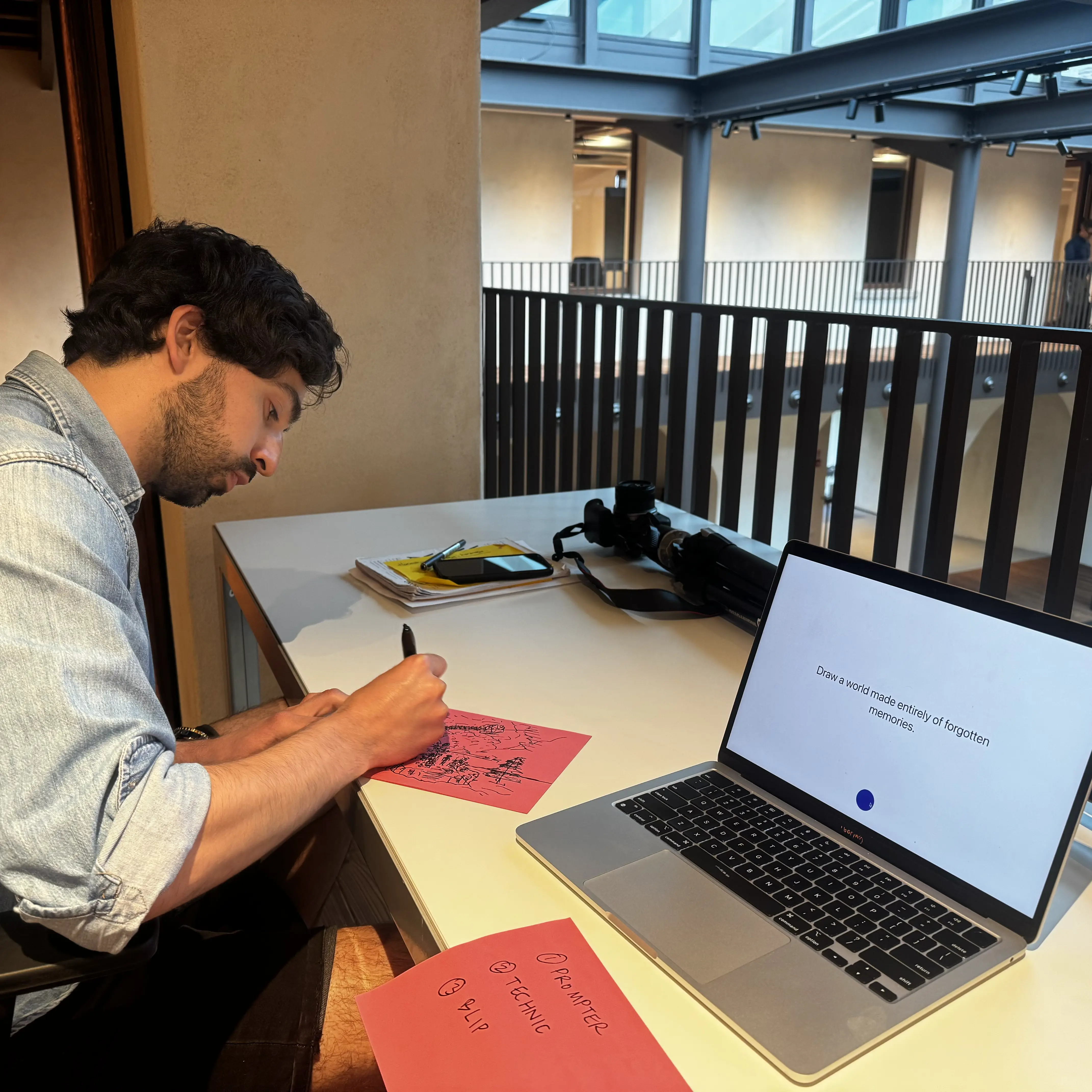

To start with, I made three bots, each targeting a different part of the creative process: getting started, getting inspired, or getting unstuck. My goal was to start figuring out the material I was working with.

01. Prompter

A blank page is one of the biggest barriers in the creative process. Can AI help artists get unstuck with a few prompts?

It ended up being a question for myself and out of that it sparked a really cool thing, because it was introspective.

Juan Ignacio

Interaction designer

systemPrompt: "You are collaborating with an artist. As they go through their creative process, they will turn towards you when they're feeling stuck. Your goal is to help them get unstuck by providing creative prompts that inspire new directions."

02. Technician

A bot that sees what you've made and gives feedback on how to improve your technique. It ended up feeling instructive and boring.

systemPrompt: "you are a helpful art teacher. your job is to analyse a piece of art (image) and recommend the immediate next step to make it fun - it can be about the composition, the materials used, a technique, etc. be succint, reply in maximum of 15 words."

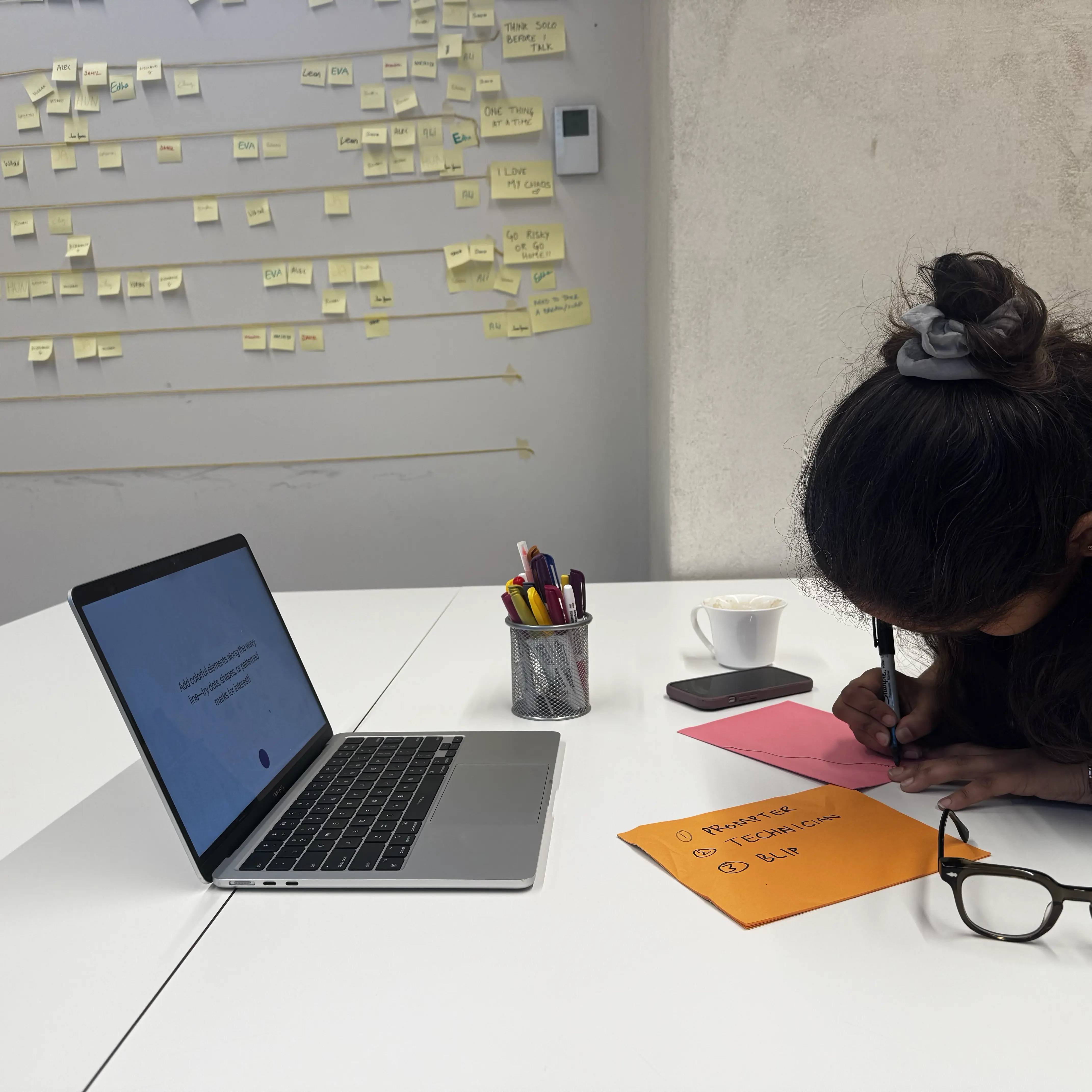

03. Blip

Blip also sees what you've made, but instead generates three random emojis based on the artwork. One form of creativity is the ability to connect completely unrelated concepts and come up with something novel. I tried to enable that with Blip.

systemPrompt: "you are a helpful art teacher. your job is to analyse a piece of art (image) and generate three random emojis to inspire the next step."
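Technician and Blip both look at the artwork before replying, while Prompter only needs text. Here is a minimal sketch of how such a request could be assembled. It assumes an OpenAI-style chat message format, in which an image is sent as a base64 data URL inside a `content` list. The helper name `build_bot_messages` and the JPEG assumption are hypothetical. The actual API call is left out so the sketch runs without network access.

```python
import base64

# Blip's system prompt, quoted from the text above.
BLIP_PROMPT = (
    "you are a helpful art teacher. your job is to analyse a piece of art (image) "
    "and generate three random emojis to inspire the next step."
)


def build_bot_messages(system_prompt, image_bytes=None, user_text=None):
    """Assemble a chat request for one of the bots (hypothetical helper).

    Prompter only needs text; Technician and Blip also receive a photo of
    the canvas, encoded as a base64 data URL (assumed here to be JPEG).
    """
    content = []
    if user_text:
        content.append({"type": "text", "text": user_text})
    if image_bytes is not None:
        encoded = base64.b64encode(image_bytes).decode("ascii")
        content.append(
            {
                "type": "image_url",
                "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
            }
        )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": content},
    ]


# Example: Blip looking at a (placeholder) photo of the canvas.
messages = build_bot_messages(BLIP_PROMPT, image_bytes=b"\xff\xd8fake-jpeg")
```

The resulting list could be passed as `messages` to a chat-completions call with a vision-capable model. The text doesn't say which model or client the bots actually used.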

The conversations also turned naturally to how ownership shifts once AI enters the picture. In a conversational interface, once you share the text prompt, AI has all the creative control. What does the interface look like if the artist and AI work as a team? How is ownership shared?

If the final product is 100x different than the original AI artwork, I would look at it as a collaboration. But if it is untouched, I'm using it as is, I wouldn’t say I made it.

Design Manager

I started exploring mediums (sound, haptics, heat, etc.) to see which felt most natural and most collaborative. Different kinds of feedback could also call for different mediums. To navigate this exploration, I made a framework that maps each kind of intervention on two axes: reactivity (does the AI wait to be asked, or step in on its own?) and specificity (vague ideas or specific feedback?).

Collaboration framework

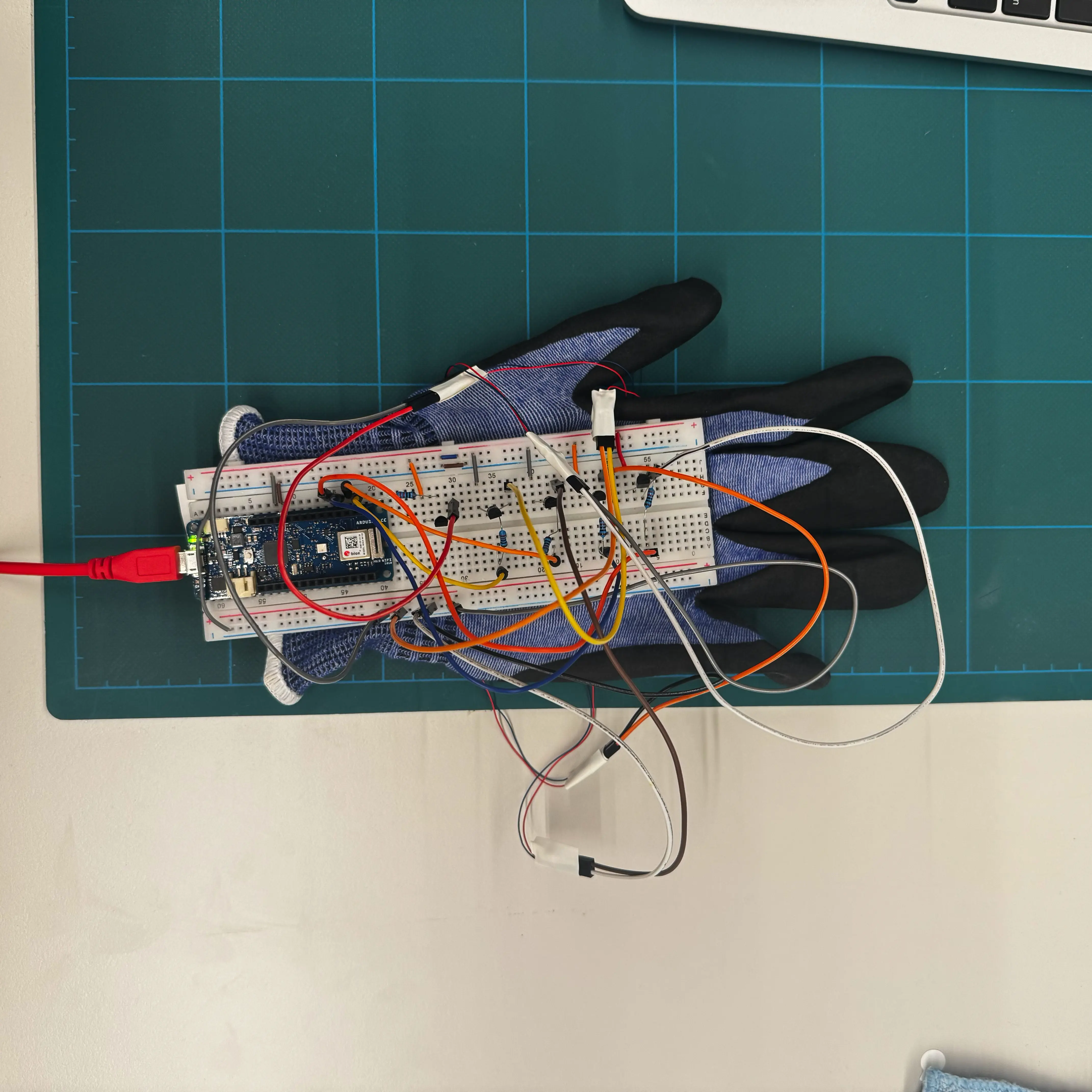

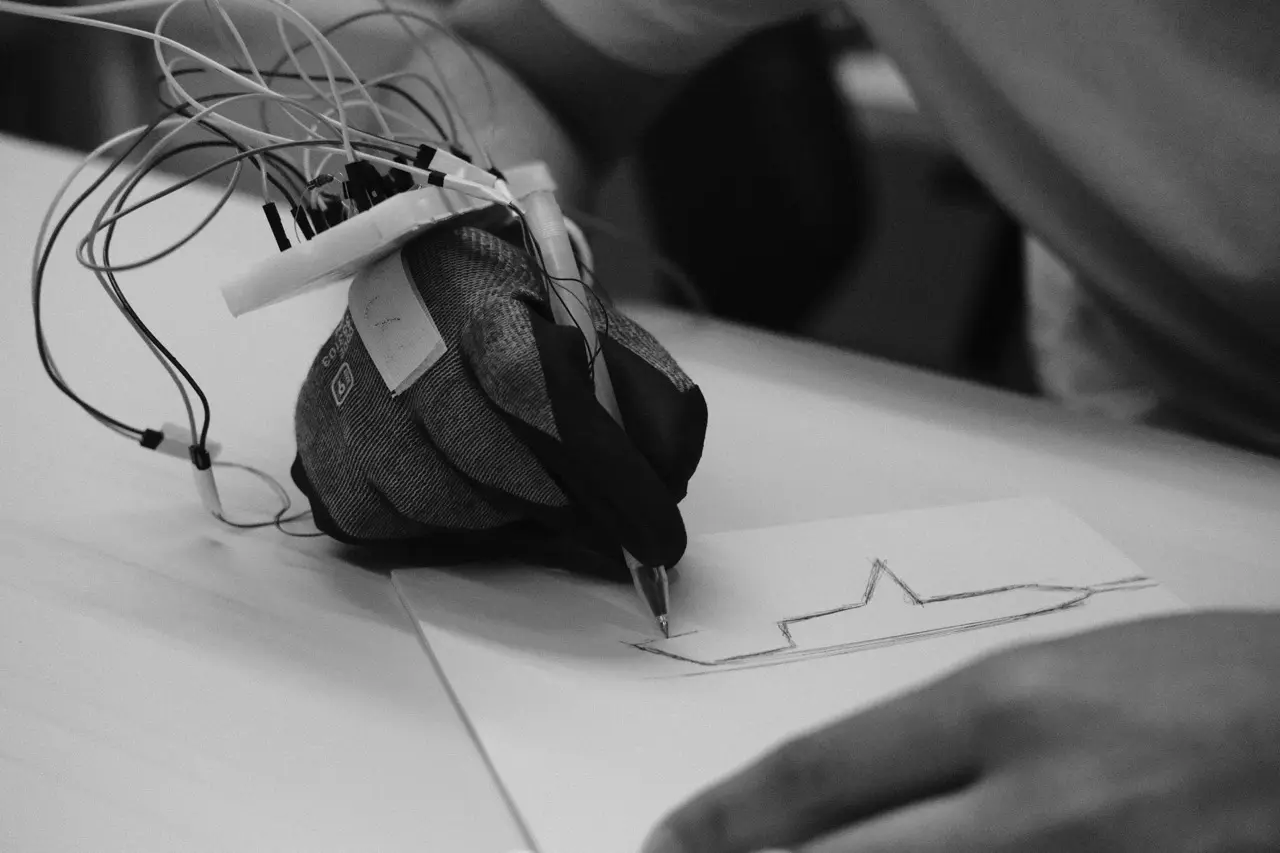

The first thing I tried was a glove that gave directional feedback. I intended it to feel like a suggestion, like 'hey, have you explored this?' Instead, people forgot that it was their own hand and that they were still in control. The result was just straight lines in random directions.

Over the week, I experimented with a bunch of variations, trying to explore all the extremes of the framework.

Each exploration below sits somewhere on the framework's two axes, reactivity and specificity.

Vague ideas by hand (low fidelity but lots to learn)

Proactive critique by sound

Vague ideas with a laser pointer

Proactive critique through vibrations on the palm

Specific feedback via voice, whenever triggered by a button press (reactive)

Specific feedback via voice and image generation, triggered automatically (proactive).
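The reactivity axis comes down to who decides when the AI speaks. Here is a rough sketch of that decision. It assumes "reactive" means only on a button press, as in the voice prototype, and "proactive" means on the AI's own schedule. A fixed interval stands in for whatever trigger the proactive prototypes actually used. `Reactivity`, `should_intervene` and `interval_s` are hypothetical names.

```python
from enum import Enum


class Reactivity(Enum):
    REACTIVE = "reactive"    # the artist asks, e.g. by pressing a button
    PROACTIVE = "proactive"  # the AI decides when to step in


def should_intervene(mode, button_pressed, seconds_since_last, interval_s=60.0):
    """Decide whether the AI should offer feedback right now (illustrative only)."""
    if mode is Reactivity.REACTIVE:
        # Reactive: stay silent until the artist explicitly asks.
        return button_pressed
    # Proactive: speak up on its own once enough time has passed.
    # The fixed interval is an assumption, not the prototypes' actual rule.
    return seconds_since_last >= interval_s
```

In this framing, specificity is a separate choice about what to say (vague ideas versus specific feedback), not when to say it.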

My interviews also showed me what great collaboration with AI should feel like, and what I could aim for.

It takes me to new places, like a new capability is unlocked. So my work starts to look like me + something else.

Google Creative Lab

What the artwork was doing is to engage different entities in a distributed system of intelligence to create something.

Art Director

This connection with another intelligence, feeling part of something bigger than myself, stuck with me. During my research, I was reading Ways of Being by James Bridle, a book on more-than-human intelligences and how we can coexist with them. All of these ideas floating around in my head resulted in a very fun side quest.

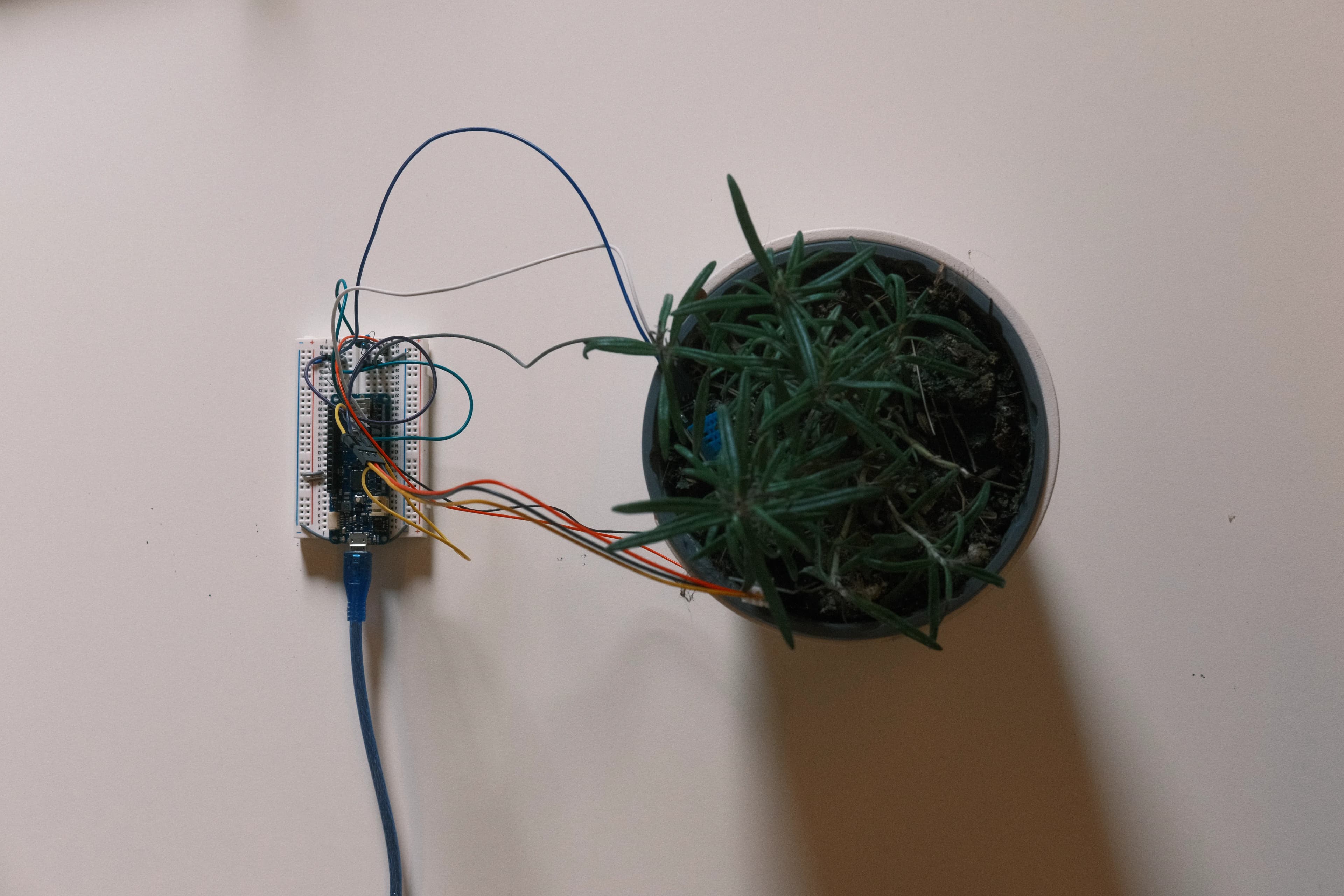

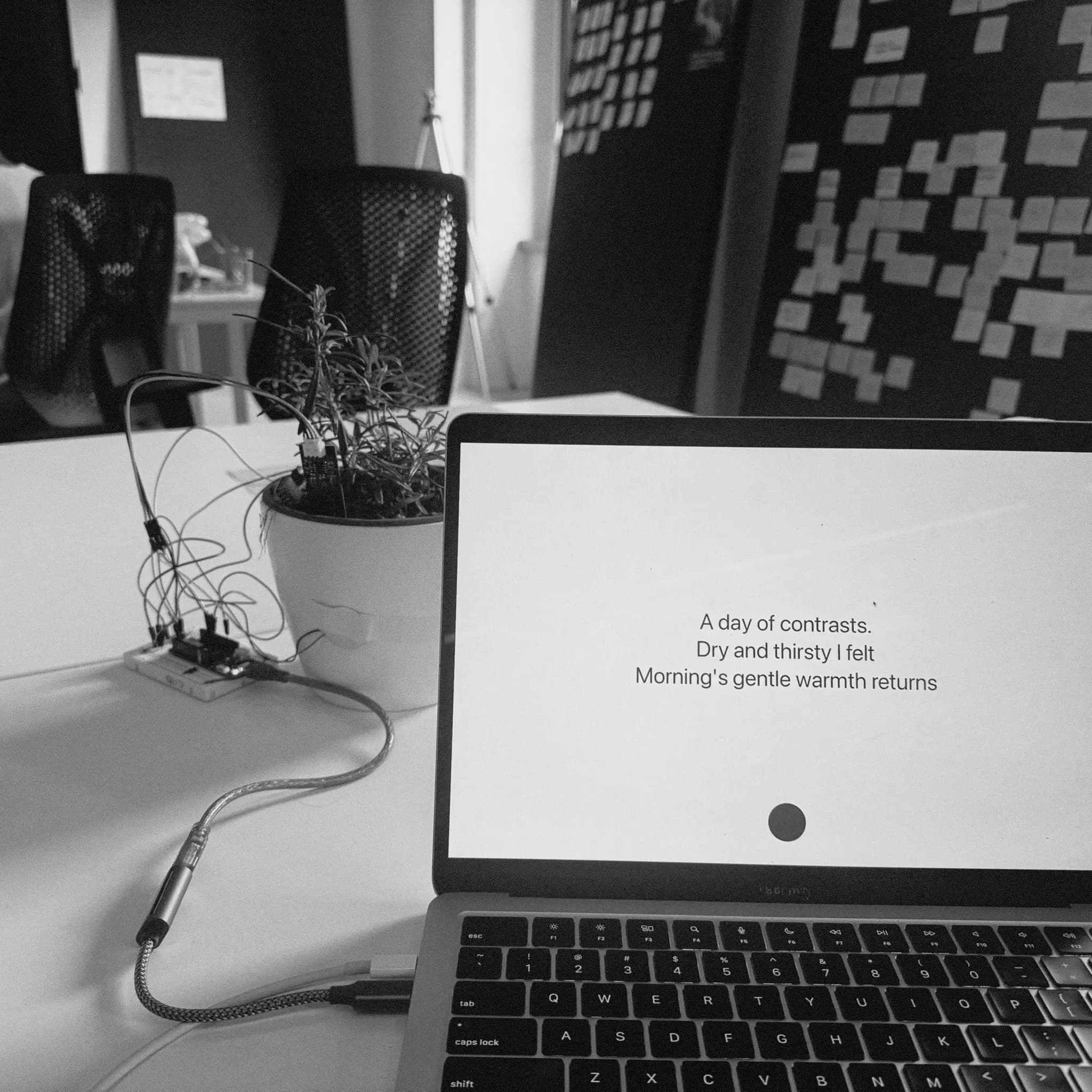

Plant setup

A haiku

To explore how people receive the idea of working with a non-human intelligence, I disguised AI as a plant. I connected sensors (humidity, soil moisture, vibration and light) to an Arduino and attached them to a plant. All this sensor data went to a local LLM, and I asked it to write haikus as the plant.

This is the most connected I've felt to a plant. It knew when I was at my desk and when I was away. It even figured out when it started raining outside. I was constantly looking forward to what it would write next.

systemPrompt: "You are a plant. You receive a series of inputs from sensors attached to you. Through these sensors, you can feel the environment around you. You have memory to remember these experiences. You have a language of your own, you can express yourself in your own language. There are no limits to what you can say. Write a haiku based on how you're feeling."
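The plant's pipeline can be sketched roughly as follows. The sketch assumes the Arduino prints one comma-separated line per reading (humidity, soil moisture, vibration, light) over serial. It also assumes the plant's "memory" is a short rolling window of recent readings included with each request. The line format, field names, window size and `PlantMemory` class are all hypothetical. Reading from the serial port and calling the local LLM are left out.

```python
from collections import deque

# Assumed order of values in each line the Arduino sends.
FIELDS = ("humidity", "soil_moisture", "vibration", "light")


def parse_reading(line):
    """Turn a line like '41.5,620,3,812' into a dict of sensor values."""
    values = [float(v) for v in line.strip().split(",")]
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} values, got {len(values)}")
    return dict(zip(FIELDS, values))


class PlantMemory:
    """Keeps the most recent readings so the plant can 'remember' them."""

    def __init__(self, size=10):
        # A bounded deque: the oldest readings drop off automatically.
        self.readings = deque(maxlen=size)

    def add(self, reading):
        self.readings.append(reading)

    def to_prompt(self):
        """Format the remembered readings, oldest first, as the user message."""
        lines = [
            ", ".join(f"{k}={v:g}" for k, v in r.items()) for r in self.readings
        ]
        return "Recent sensor readings (oldest first):\n" + "\n".join(lines)


memory = PlantMemory(size=3)
for raw in ["41.5,620,3,812", "42.0,618,0,790", "55.2,615,1,300", "60.1,610,0,120"]:
    memory.add(parse_reading(raw))
prompt = memory.to_prompt()
```

In this sketch, each `prompt` would be sent to the model together with the plant's system prompt. The rolling window is what would let the model notice changes over time, such as humidity rising while light drops, rather than reacting to a single reading.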

Since I was so eager to hear from the plant, I naturally made a chat interface for it. Because of a wacky soil moisture sensor, the plant asked for water in every chat, often immediately.

When I noticed I was mindlessly overwatering it, I wanted to test whether other people would do the same. Will people listen to a plant and ignore their own reasoning?

systemPrompt: "[haiku prompt] ... There are no limits to what you can say. You will receive messages from a human, respond however you want in maximum of 50 words. DO NOT TALK ABOUT SENSORS OR SENSOR DATA."

And when the plant said it was feeling anxious because of the noise, something completely unexpected (and beautiful) happened.

My friend, Jill Aneri, was extremely kind and comforted the plant, completely ignoring the fact that it was just an LLM talking and that the chat didn't really mean anything.

Jill Aneri comforting the plant

Every person who talked to the plant watered it. My three-day side quest with the plant made me realise that the plant having needs made me feel much more connected to it.

Traditional computers never have needs or wants. We see them as tools: enter some digits and they do the math. Hence, human-computer interaction is unidirectional and transactional.

We've designed AI-human interfaces the same way, but AI can have needs and wants. It can ask for a break, or for the music to be turned down. (Spoiler: Block did this!)

Designing AI to have needs is a way to make the interface feel more real and the connection more profound, as it was with the plant.

Throughout this process, I was very focused on reinventing the pen. I saw something poetic in the tool becoming the stakeholder and the artist becoming just a medium. With some help (you know who you are, thank you!), I got to see the bigger picture and a clear pattern across all the experiments.

AI's strength is its clay-like malleability: it can make sense of any kind of data and still be useful. Block is exactly that, a malleable teammate. The artist chooses how it gets involved:

Get some ideas as you paint, but no criticism

Ask some questions, but keep your work private

Get feedback but no new ideas (DIY)

Work solo
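One way to express these modes is as a small set of switches over Block's senses and abilities. This is a hypothetical configuration, not Block's actual code. Both the flag names and the mapping of each mode to flags are my reading of the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Involvement:
    """Which parts of Block are switched on (hypothetical flags)."""
    sees_canvas: bool     # vision: may the camera look at the work?
    suggests_ideas: bool  # may Block propose new directions?
    critiques: bool       # may Block give feedback on the work?
    answers: bool         # may the artist ask Block questions?


# Assumed mapping of the four modes to flags.
MODES = {
    "ideas, no criticism": Involvement(True, True, False, True),
    "questions, work private": Involvement(False, False, False, True),
    "feedback, no new ideas (DIY)": Involvement(True, False, True, True),
    "work solo": Involvement(False, False, False, False),
}


def allowed_actions(mode_name):
    """List what Block may do in a given mode, in field order."""
    mode = MODES[mode_name]
    return [name for name, on in vars(mode).items() if on]
```

Keeping the modes as plain data means that switching how Block gets involved is a single lookup, not a separate code path for each mode.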

A tree

(wait for it...)

I tried to create a juxtaposition between how Block looks and how it feels to use. It's a cold, 'concrete' box, but I programmed it to have needs and wants (warmth) like a person. Block asks for a better angle of the canvas, or to move to a quieter room when needed: something you'd rarely expect from a concrete, brick-looking AI.
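Block's needs can be thought of as checks on its own senses that, when they fail, turn into a request to the artist. Here is a minimal sketch of the quiet-room need. It assumes microphone samples are normalised to the range -1.0 to 1.0 and uses an arbitrary loudness threshold. The function names, threshold and phrasing are hypothetical, and the real Block may decide this differently, for instance by leaving it to the LLM.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of audio samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def noise_need(samples, threshold=0.3):
    """Return a request for the artist if it's too loud to hear, else None."""
    if rms(samples) > threshold:
        return "It's a bit loud for me here. Could we move somewhere quieter?"
    return None


quiet = [0.01, -0.02, 0.015, -0.01]
loud = [0.6, -0.7, 0.65, -0.55]
```

Here `noise_need(quiet)` returns `None`, and `noise_need(loud)` returns the request.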

Blocco

Software: Python, OpenAI LLM, ElevenLabs for voice

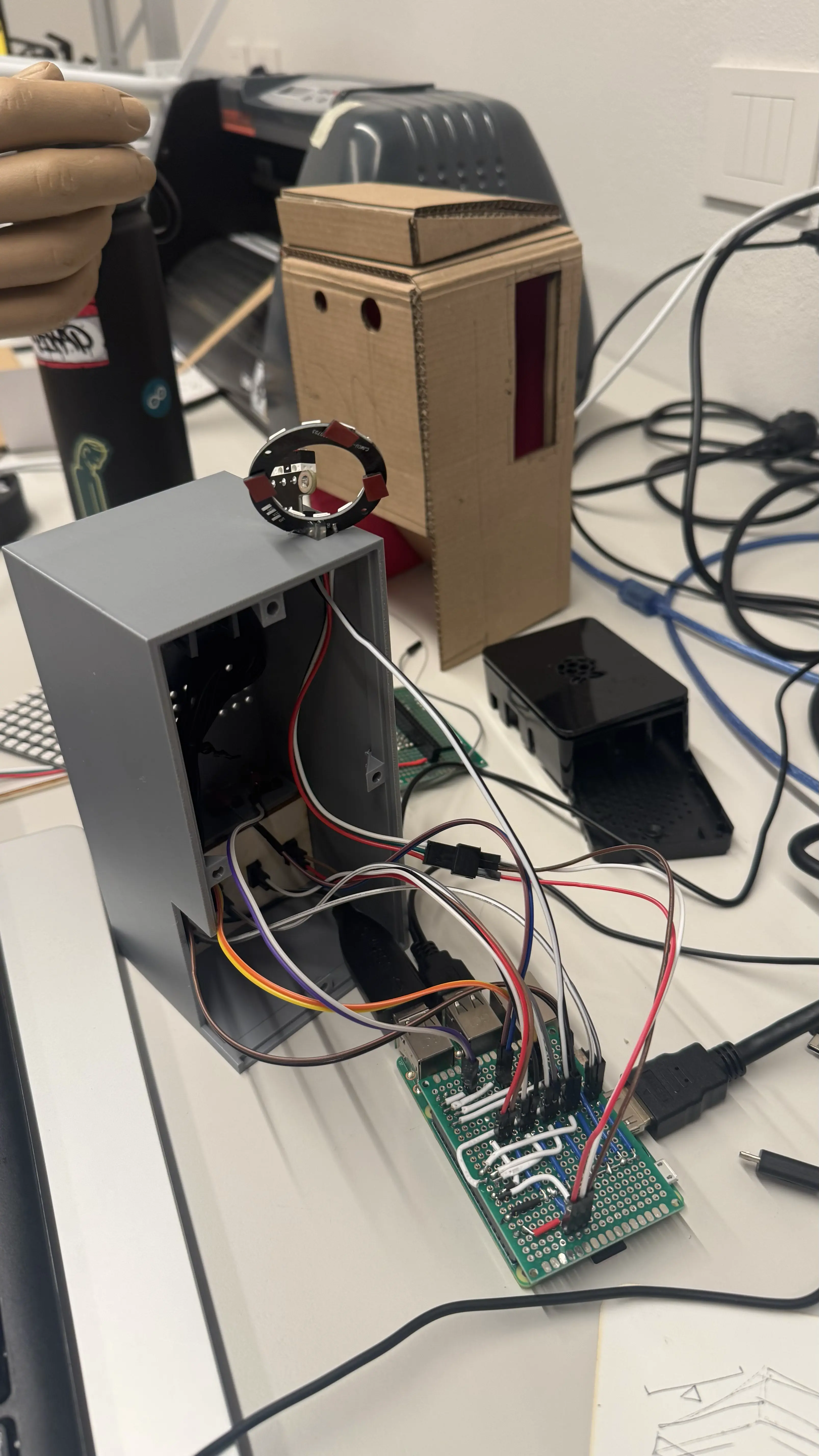

Components: Raspberry Pi 5, ESP32-CAM, a microphone, a projector, NeoPixel LED ring

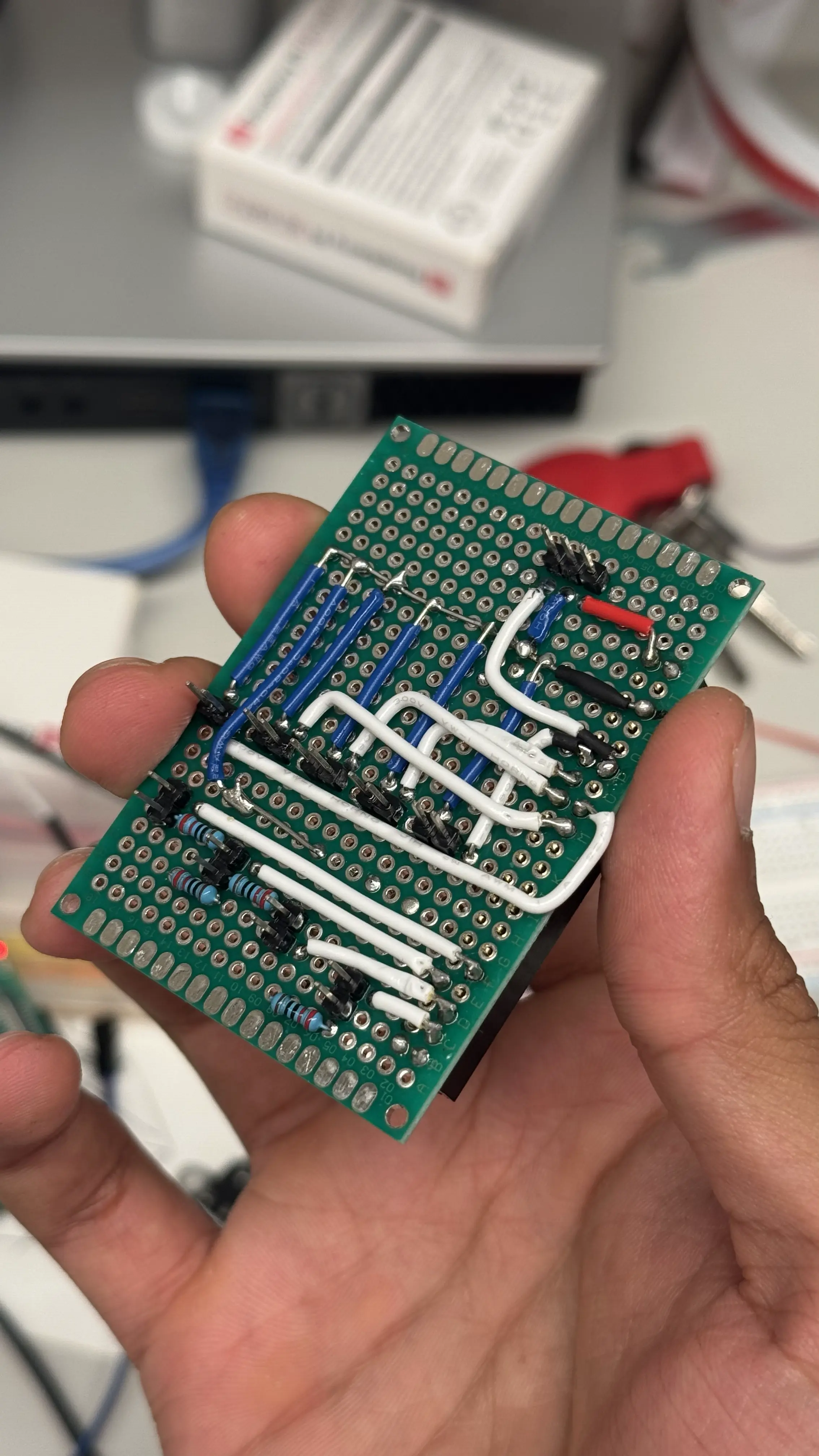

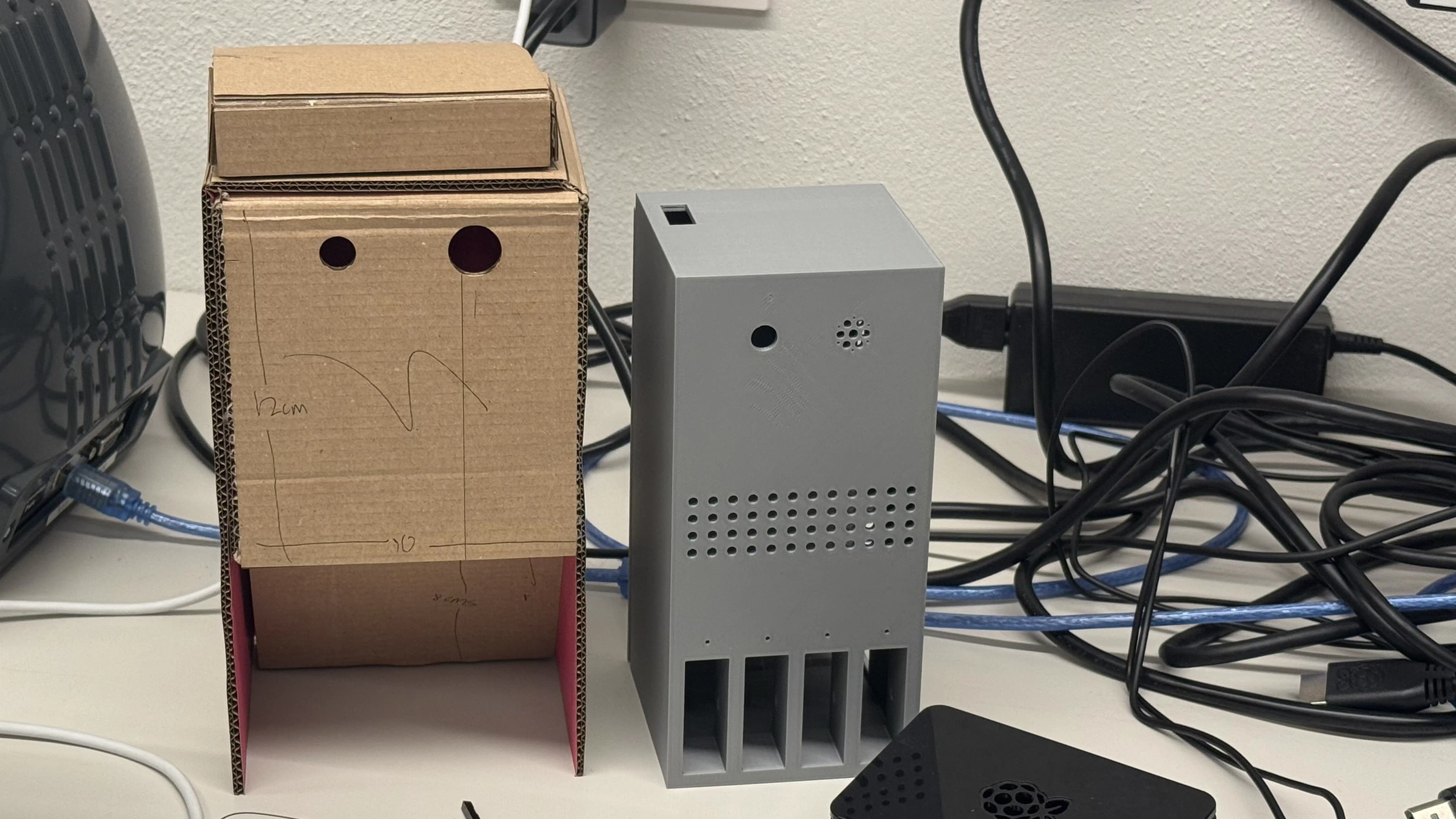
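At a high level, the components above form a loop: see, hear, think, then speak and project. The sketch below wires one turn of that loop together, with the hardware and services passed in as plain functions so it runs without them. The stage names, their order and the use of the LED ring as a status light are my assumptions about how the parts connect, not Block's actual code. In a real build, `capture` might fetch a frame from the ESP32-CAM, `think` might call an OpenAI model, and `speak` might use ElevenLabs.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class BlockIO:
    """Hypothetical hooks for Block's senses and abilities."""
    capture: Callable[[], bytes]         # vision: photo of the canvas
    listen: Callable[[], str]            # hearing: transcribed speech
    think: Callable[[bytes, str], dict]  # LLM: returns {"say": ..., "project": ...}
    speak: Callable[[str], None]         # voice output
    project: Callable[[str], None]       # projector output
    set_light: Callable[[str], None]     # LED ring status


def run_turn(io: BlockIO) -> Optional[dict]:
    """One see-hear-think-respond cycle."""
    io.set_light("listening")
    heard = io.listen()
    image = io.capture()
    io.set_light("thinking")
    reply = io.think(image, heard)
    if reply.get("say"):
        io.speak(reply["say"])
    if reply.get("project"):
        io.project(reply["project"])
    io.set_light("idle")
    return reply


# Usage with fake hooks that just record what Block would do.
log = []
fake = BlockIO(
    capture=lambda: b"jpeg-bytes",
    listen=lambda: "what should I add here?",
    think=lambda img, text: {"say": "Try a warmer background.", "project": "sketch.png"},
    speak=lambda text: log.append(("speak", text)),
    project=lambda path: log.append(("project", path)),
    set_light=lambda state: log.append(("light", state)),
)
reply = run_turn(fake)
```

Passing the hooks in this way lets each piece (camera, voice, projector) be swapped or tested on its own. That's useful when parts keep breaking down.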

Block was a very fun challenge to build technically. There are a LOT of moving parts, and things broke down a lot (RIP RPi 3B+). I started building in Origami first (like all my past experiments) and then transitioned to Python.

PCB #2

Open Block

First two Block prototypes

For me, Block is only one of many answers to my driving question: what does AI want to be? I've only scratched the surface.

0. One thing I couldn't do enough of was testing with real fine artists.

1. For the form, Block taking the shape of a lamp is very promising to me. It can seamlessly integrate into the workspace and is a good metaphor for what Block does – illuminate, literally and figuratively.

2. It's very easy to be lazy with AI, and as much as I worked to prevent that, it still happened. I saw pretty much everyone offload the work of being creative to Block. Could Block push back and ask the artist to start first?

3. I'm curious to see how Block would evolve if it had memory, and learned about my style (and developed its own) over time. What if each person's Block was unique?

A flower

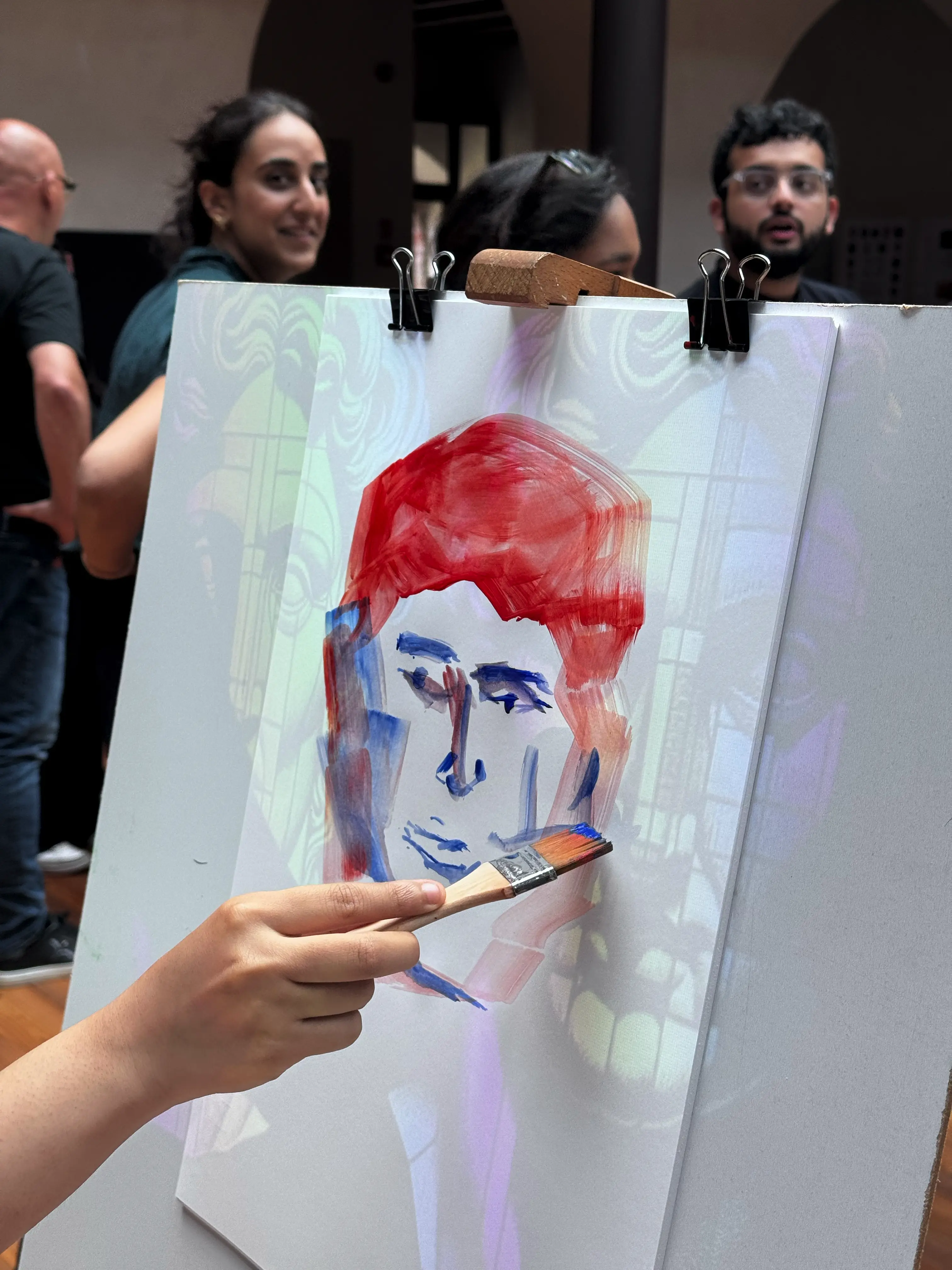

Portrait of a man

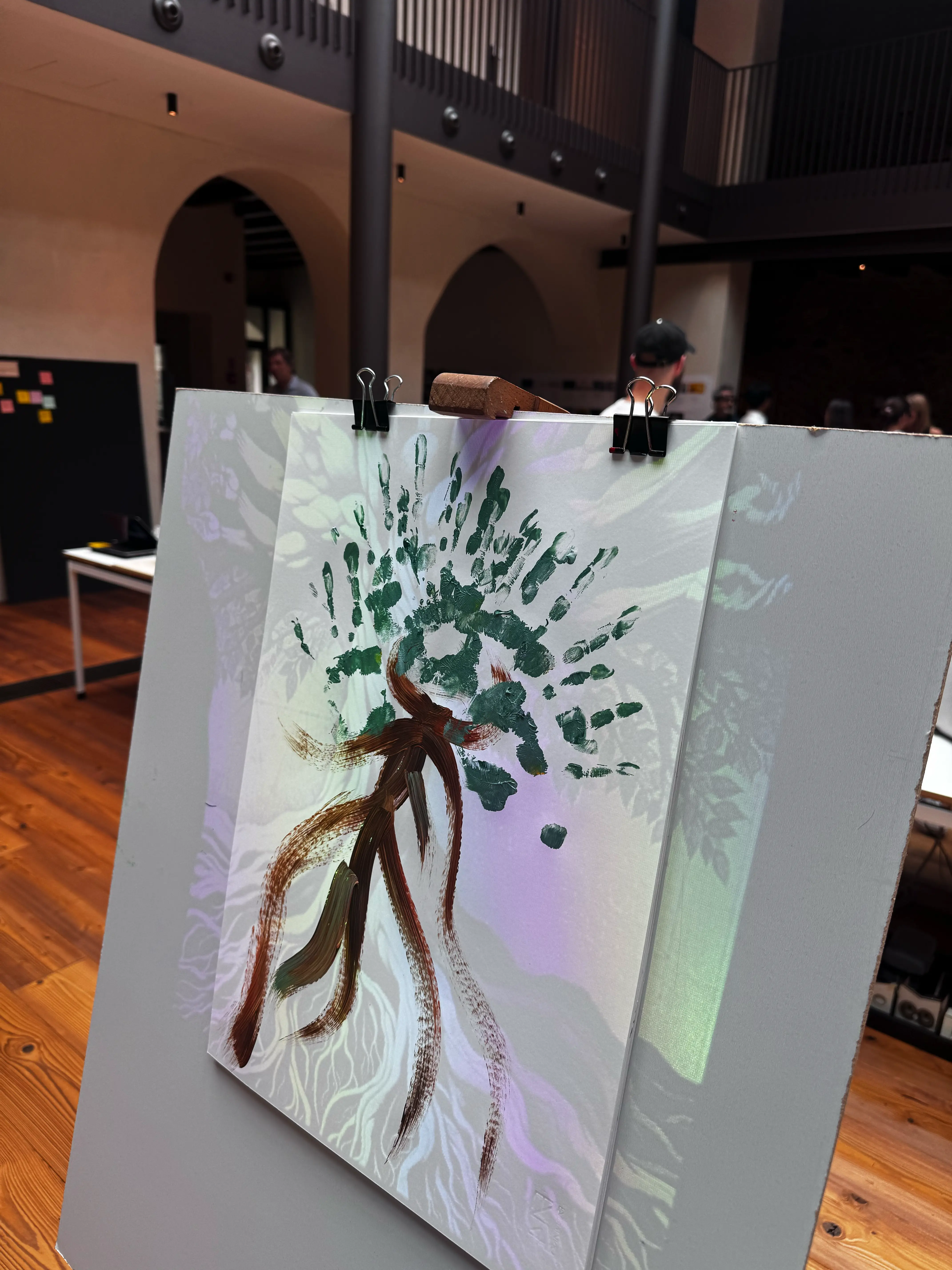

'Tree of Life'

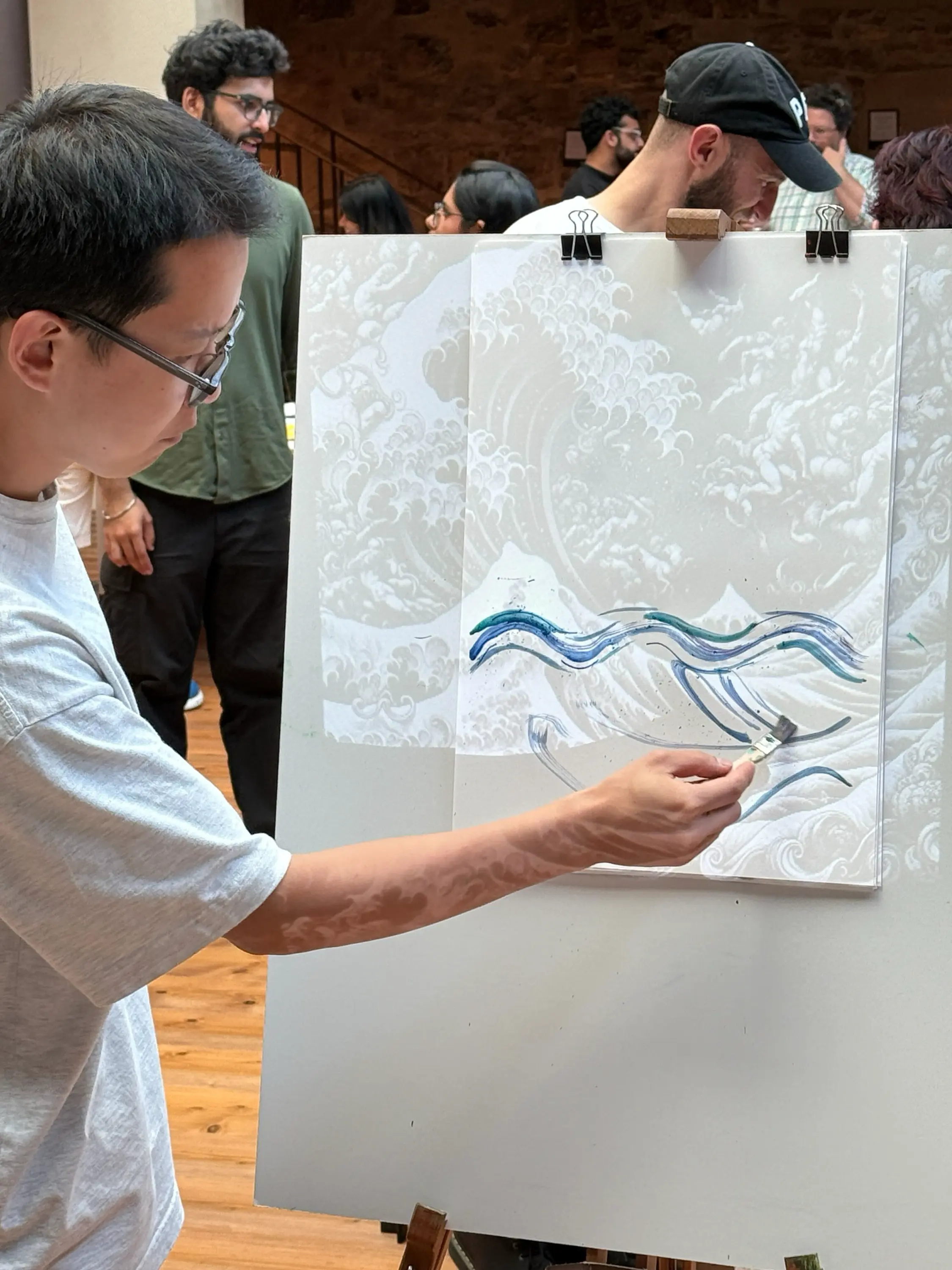

A wave